
Fusion Power Plants Won't Happen Anytime Soon

By Daniel L. Jassby (retired from Princeton Plasma Physics Lab)

“Common sense ain’t common.” —Will Rogers

In the last five years hundreds of journalists, editorialists and government policy makers have apparently fallen victim to the salesmanship of fusion energy promoters. They have succumbed to the propaganda line that essentially all the scientific issues of fusion power have been settled, and only residual engineering problems need to be resolved. Other proponents assure us that even the engineering issues are in hand, and the implementation of fusion power requires only ensuring a robust industrial supply chain and accommodating nuclear safety regulations.

What is the reality of a fusion energy source? For 75 years national governments have funded programs to achieve terrestrial fusion energy. That period has been bookended by two versions of the only technique that has proved capable of igniting a thermonuclear burn, namely, implosion of a fusion fuel capsule driven by soft X-ray ablation of the capsule surface. The principal difference between the two versions was the source of the X-rays: a 200-terajoule fission explosion in the Ivy Mike (“H-bomb”) shot of 1952 [1], and a 2-megajoule laser pulse in Lawrence Livermore’s NIF (National Ignition Facility) in 2022 [2]. Despite the 8 orders of magnitude difference in supplied energy, the X-ray pulse length (3 to 10 ns) and effective black-body “temperature” (300 eV in NIF and 1,000 eV in Ivy Mike) were remarkably similar.
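The energy comparison in the paragraph above can be checked with a few lines of arithmetic. This is a minimal sketch that uses only the two source energies and the two X-ray "temperatures" quoted in the text; the Boltzmann constant used to express the eV figures in kelvin is standard physics added here for scale, not a number from the source.

```python
import math

# Energies of the two X-ray sources quoted in the text, in joules.
IVY_MIKE_SOURCE_J = 200e12  # 200-terajoule fission explosion (1952)
NIF_LASER_J = 2e6           # 2-megajoule laser pulse (2022)

# Boltzmann constant in eV per kelvin (CODATA value; added for scale).
K_B_EV_PER_K = 8.617333262e-5


def orders_of_magnitude(a: float, b: float) -> float:
    """Return log10 of the ratio a / b."""
    return math.log10(a / b)


def ev_to_kelvin(energy_ev: float) -> float:
    """Convert a black-body 'temperature' quoted in eV to kelvin."""
    return energy_ev / K_B_EV_PER_K


gap = orders_of_magnitude(IVY_MIKE_SOURCE_J, NIF_LASER_J)
print(f"energy gap: {gap:.1f} orders of magnitude")
for label, t_ev in [("NIF", 300.0), ("Ivy Mike", 1000.0)]:
    print(f"{label}: {t_ev:.0f} eV ~ {ev_to_kelvin(t_ev):.2e} K")
```

On these numbers the 300-eV and 1,000-eV radiation fields correspond to roughly 3.5 million and 11.6 million kelvin, a factor of about three apart, against a factor of 10^8 in source energy.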

The achievement of ignition and propagating thermonuclear burn in the NIF has been called a “Kitty Hawk” moment for fusion R&D, but a better analogy is the first manned moon landing in 1969. Within a few years after the Wright Brothers’ first flights, dozens of fliers in the US and Europe made even more impressive demonstrations, but in half a century no entity has been able to repeat NASA’s 1969-72 lunar landings (not even NASA, to date). The NIF results were achieved by a uniquely skilled assemblage of scientists and technologists with highly coordinated support teams of unparalleled capabilities embracing countless disciplines. Those capabilities cannot be readily duplicated, and it will take decades for another laboratory in the US or abroad to replicate NIF’s achievement. There exist large laser-fusion facilities in France, China and Russia, but the fusion output from France’s LMJ is reminiscent of the US’s ICF performance in the 1980’s, and no significant results have been reported from China’s SG-III or Russia’s UFL-2M.

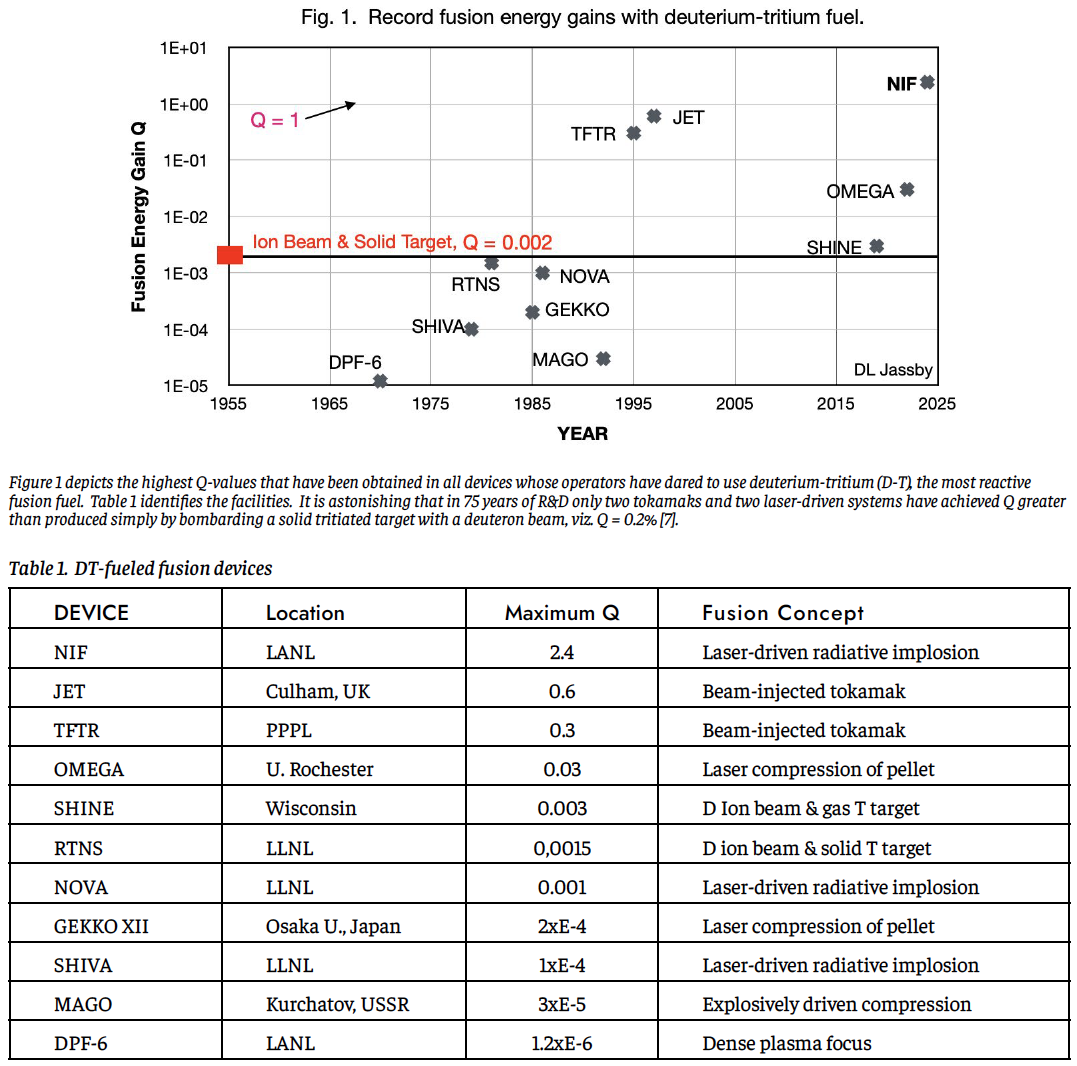

Scientific feasibility remains a formidable challenge

Can any other fusion scheme demonstrate scientific feasibility? For a power-producing reactor, “scientific feasibility” is the ability of the underlying fusioning plasma to reach thermonuclear ignition, or at least to demonstrate fusion energy gains of 10 or more. The fusion energy gain, Q, is the ratio of fusion energy output to injected heating energy during a pulse. In radiatively-driven implosion the denominator of Q should logically be taken as the X-ray energy deposited on the fuel capsule. However, it is commonly taken as the laser energy that produces the X-rays, which is 5 to 10 times larger. Consequently, Q was only 0.7 in the first ignition-threshold shot of August 2021; it has since reached 2.4, and the NIF may attain Q = 10 some years hence. Whatever the definition of Q, ignition has occurred when the core of the compressed fuel capsule continues to rise in temperature after the compression phase is complete, as observed in the NIF’s Dec. 2022 shot and numerous later shots [2].
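The effect of the choice of denominator can be made concrete. The sketch below assumes only the 5-to-10 ratio of laser energy to capsule-deposited X-ray energy stated in the paragraph above, and rescales the laser-based NIF gains quoted there into the capsule-based gain the text argues is the more logical definition. The function names are illustrative, not taken from any NIF analysis code.

```python
def fusion_gain(fusion_energy: float, heating_energy: float) -> float:
    """Q = fusion energy out / heating energy in, over one pulse."""
    if heating_energy <= 0:
        raise ValueError("heating energy must be positive")
    return fusion_energy / heating_energy


def capsule_gain_from_laser_gain(q_laser: float, laser_to_xray_ratio: float) -> float:
    """Re-express a laser-based Q using the X-ray energy on the capsule.

    Any laser energy works because Q is a ratio; take 1 unit. The fusion
    yield follows from q_laser, and the X-ray energy deposited on the
    capsule is the laser energy divided by laser_to_xray_ratio.
    """
    laser_energy = 1.0
    fusion_energy = q_laser * laser_energy
    xray_energy = laser_energy / laser_to_xray_ratio
    return fusion_gain(fusion_energy, xray_energy)


# Laser-based gains quoted in the text: August 2021 and the best since.
for q_laser in (0.7, 2.4):
    low = capsule_gain_from_laser_gain(q_laser, 5)
    high = capsule_gain_from_laser_gain(q_laser, 10)
    print(f"laser-based Q = {q_laser}: capsule-based Q ~ {low:.1f} to {high:.1f}")
```

Under that assumed ratio, the August 2021 value of 0.7 corresponds to a capsule-based gain of roughly 3.5 to 7, and the later 2.4 to roughly 12 to 24; the physics of the shot is unchanged, only the bookkeeping differs.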

The successful NIF results promise nothing about the feasibility of any other proposed method for controlled fusion. There do exist two other plausible concepts, namely laser compression of a spherical fusion fuel capsule by the laser beams themselves (called “direct drive”), and the magnetically confined tokamak. Using direct drive, the OMEGA facility at the University of Rochester’s Laboratory for Laser Energetics (LLE) has produced 900 J of fusion energy with a 28-kJ laser pulse [3], giving Q = 0.03. Any attempt to reach ignition with direct drive will require a new facility delivering much higher laser energy, a decade-long project to implement.
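The OMEGA figure follows directly from the definition of Q. A minimal sketch using only the numbers quoted in the paragraph above also shows how far that result sits from the gain of 10 that the text sets as the threshold for scientific feasibility.

```python
# Figures quoted in the text for the OMEGA direct-drive result [3].
OMEGA_FUSION_J = 900.0
OMEGA_LASER_J = 28_000.0

# Gain the text treats as the minimum for scientific feasibility.
REACTOR_Q_TARGET = 10.0

q_omega = OMEGA_FUSION_J / OMEGA_LASER_J
shortfall = REACTOR_Q_TARGET / q_omega

print(f"OMEGA Q = {q_omega:.3f}")
print(f"factor still needed to reach Q = {REACTOR_Q_TARGET:.0f}: ~{shortfall:.0f}x")
```

The result, Q of about 0.032, is roughly 300 times short of Q = 10.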

The leading magnetic confinement concept, the tokamak, has attained a maximum Q of 0.6, but half of that is due to beam-thermal reactions, that is, fusion reactions between injected beam ions and thermal plasma ions, which are not scalable to high Q. The highest thermonuclear Q achieved in any tokamak is at most 0.3, more than a factor of 30 below the Q = 10 required for a power reactor. There have been no advances in the thermonuclear performance of tokamaks since 1997 [4]. The JET tokamak’s much heralded “record fusion energy pulse” of 59 MJ in 2021 was achieved by injecting deuterium beams into a tritium target plasma [5], and while the total Q was about 0.35, the thermonuclear (non-beam) Q was only 0.08 for that shot [6].
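Because the total and thermonuclear gains of the JET pulse share the same denominator (the injected heating energy), their ratio shows how the 59 MJ divides between thermonuclear and beam-driven reactions. This sketch assumes the rounded values quoted in the paragraph above (total Q of about 0.35, thermonuclear Q of 0.08); the heating energy it prints is only what those rounded gains imply, not a measured value. The function name is hypothetical.

```python
def split_by_gain(e_total_mj: float, q_total: float, q_thermal: float):
    """Split a pulse's fusion energy into thermonuclear and beam-driven parts.

    Both gains share the same denominator (injected heating energy), so
    q_thermal / q_total is also the thermonuclear share of the yield.
    Returns (thermonuclear MJ, beam-driven MJ, implied heating MJ).
    """
    thermal_fraction = q_thermal / q_total
    e_thermal = e_total_mj * thermal_fraction
    e_beam = e_total_mj - e_thermal
    e_heating = e_total_mj / q_total
    return e_thermal, e_beam, e_heating


# JET 2021 record pulse, using the values quoted in the text [5, 6].
e_th, e_beam, e_heat = split_by_gain(59.0, 0.35, 0.08)
print(f"thermonuclear: {e_th:.1f} MJ, beam-driven: {e_beam:.1f} MJ, "
      f"implied heating: {e_heat:.0f} MJ")

# Ratio between the Q = 10 reactor target and the best thermonuclear Q (0.3).
gap = 10.0 / 0.3
print(f"Q = 10 is about {gap:.0f} times the best thermonuclear tokamak Q")
```

On those figures, roughly three-quarters of the record yield came from beam-driven rather than thermonuclear reactions.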

Achieved fusion energy gains

Fusion concepts that have never used tritium can be compared using their operation in deuterium alone. This author made a compilation of record neutron yields in 1979 [8]. Except for tokamaks and laser-driven systems, little has changed in 45 years, with the old records still standing for most pinches of all types, magnetic mirrors, plasma focus, reverse-field configurations and electron-beam-driven systems. Since then, only beam-injected tokamaks, one RF-heated tokamak and one stellarator have achieved Q substantially greater than that produced simply by bombarding a deuterated target with a deuteron beam, viz. Q = 0.001%. The stellarator is the tokamak’s close relation, and has achieved fusion confinement parameters and neutron output a factor of 20 below those of the tokamak’s best. Just two other concepts, the DPF (Dense Plasma Focus) and MagLIF (an imploding liner), have achieved fusion gains in deuterium comparable with the ultra-simple beam/solid-target method. All other magnetic confinement and magneto-inertial concepts that can produce any neutrons are 3 to 6 orders of magnitude behind the tokamak in the vital parameters Q and fusion-neutron output, despite their promoters’ perennial claims of reaching “breakeven next year” (i.e., Q = 1).

In summary, no fusion concept other than radiatively-driven fuel compression has come anywhere close to ignition conditions, and none will for decades, if ever. The SPARC tokamak under construction in Massachusetts [9] may attain Q = 1 by the early 2030’s, and the repeatedly delayed ITER tokamak [10] is supposed to reach Q = 10 in the 2040’s, but there is no certainty of achieving either result. Recently announced delays in the ITER implementation schedule reduce the probability, already marginal, that it will ever become operational [11]. It’s likely that new problems will arise leading to further setbacks in the schedule. If the ITER project collapses, a successful SPARC program will not save the world as its promoters claim, but at least it may save tokamaks from oblivion.

Because of their inability to enhance fusion parameters since 1997, tokamak and stellarator operators in the last decade have concentrated on increasing discharge pulse length, asserting that longer pulse length signifies progress toward power reactor operation. But they report nothing about the variation of D-D neutron output during the pulse, if indeed there is any, and that is the most vital parameter for a reactor. Considering the inescapable adverse interactions between the plasma and first-wall components in toroidal confinement devices, it remains an open question whether fusion production, if any, can be maintained during long pulses.

Resurrecting discarded fusion concepts

As noted above, most fusion concepts reached their maximum possible performance decades ago, yielding Q-values well below those from a simple beam-target system [8]. Many especially hapless schemes can produce no neutrons whatever, and no neutrons means no fusion. In all cases obstacles to improved performance of the underlying plasmas are not merely challenging but insuperable. Nevertheless, private fusion companies are attempting to resurrect many of those zombie concepts to serve as corporate centerpieces.

Promoters claim that the latest supercomputers and artificial intelligence (AI) will now make discarded fusion approaches feasible. While AI and machine learning can process experimental datasets and other information orders of magnitude faster than older methods, these supposed cure-alls for ailing plasmas will not bring recalcitrant fusion schemes into line. AI optimizes performance by processing all existing data, but it can salvage no concept whose optimal performance falls short for physics reasons.

The trumpeted importance of supercomputers and AI is belied by the origins of today’s leading controlled-fusion concepts: Fuel compression driven by soft X-ray ablation was conceived in 1951 as the Teller-Ulam configuration and successfully implemented in the Ivy Mike device in 1952 with the help of computing machines that were nothing more than glorified desk calculators. The tokamak was conceived and developed in the 1950’s and 1960’s in the Soviet Union with no computer assistance whatever. Both methods originated and evolved with Natural Intelligence (NI) and nothing has yet supplanted them, although a more convenient source of X-rays (the laser) was found for the first method, and new heating methods (particle beams and RF waves) were applied to the second.

Government planners embrace fusion frenzy

Recently, there has appeared a new phenomenon in the “strategic planning” of government energy agencies. National governments have actually begun to believe the preposterous claims of several dozen private firms that they will deliver fusion-based electricity to the grid in the 2030’s. So the governments of the US, UK, Japan and South Korea, among others, have panicked, pushed aside their plans for DEMO’s in the 2040’s or 2050’s, and now aver that they will implement far more ambitious electricity-producing “fusion pilot plants” by 2040.

Accordingly, the two distinct spheres of government-supported labs and private fusion companies have put forward a host of proposed engineering test reactors, demonstration plants, and fusion power “pilot plants” that have absolutely no scientific or technological justification, as they are based on magnetically confined plasmas with high energy gain that nobody has come close to producing and will not for decades, if ever. Devoid of NI but bolstered by AI, the design teams might as well be planning a crewed spaceship voyage to Mars using a Piper Cub.

Besides the lack of scientific feasibility, there are two more fundamental show-stoppers. First, every fusion facility consumes megawatts to hundreds of megawatts of electricity, but no device has ever produced even a token amount of electricity (kilowatts) while gorging on megawatts [12]. It may well be decades before anyone can make even that modest a demonstration. Second, 80% of D-T fusion energy emerges as streams of hugely energetic neutrons, but no one in any line of endeavor, whether reactors or accelerators, has ever converted neutron barrages into electricity. Apparently nobody can, but every one of the public and private fusion schemes proposes to realize, in a single step from today’s primitive plasma toys, not just electricity production, but net electrical power.
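The 80% neutron share follows from momentum conservation. For reactants nearly at rest, the neutron and the alpha particle fly apart with equal and opposite momenta, so (non-relativistically) each carries kinetic energy in inverse proportion to its mass. The sketch below uses standard nuclear masses and the familiar 14.1 MeV neutron out of 17.6 MeV per reaction; these values are added here and are not from the source.

```python
# Nuclear masses in atomic mass units (standard values, added here).
M_NEUTRON_U = 1.008665
M_ALPHA_U = 4.001506


def neutron_share(m_neutron: float, m_alpha: float) -> float:
    """Fraction of the D-T reaction energy carried by the neutron.

    Equal and opposite momenta p give kinetic energies p**2 / (2 m),
    so the lighter neutron takes the fraction m_alpha / (m_n + m_alpha).
    """
    return m_alpha / (m_neutron + m_alpha)


frac = neutron_share(M_NEUTRON_U, M_ALPHA_U)
print(f"neutron share from kinematics: {frac:.1%}")
print(f"neutron share from 14.1 / 17.6 MeV: {14.1 / 17.6:.1%}")
```

Both routes give about 80%, which is why the energy-conversion problem in a D-T reactor is overwhelmingly a neutron problem.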

Every one of the public and private grand plans is a castle of sand that can collapse at any time. Some already have crumbled, such as Lockheed’s imaginary compact fusion reactor, General Fusion’s cancelled demo reactor in the UK, and South Korea’s K-DEMO originally to be implemented by 2030. The remaining grandiose fantasies will evaporate like their 20th century predecessors, most gone before the end of the USDOE’s “bold decadal vision.”

Time scale for technology development

Many supposedly reactor-relevant technologies are under development for those fusion concepts that are orders of magnitude away from basic feasibility. Ironically, no reactor technologies have been developed for the single concept (X-ray-induced fuel implosion) that has demonstrated scientific feasibility.

Those missing technologies include a laser or particle driver providing a repetitive 5-ns, 5-MJ pulse with electrical efficiency of at least 10%; the manufacturing and mass production of highly sophisticated fuel targets for about $1 each; means of target injection, tracking and engagement by the incident driver beam; and means of removing target debris and positioning the next target. Most daunting is that all those functions must be performed 20,000 to 200,000 times per day, depending on the size of the fuel capsule and laser energy pulse. That rate contrasts starkly with the present NIF shot rate of just once per day. The target chamber must be protected by a wall of flowing liquid metal or molten salt that can withstand the explosive output of fusion neutrons and radiation and convert this incident energy to electricity.
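To put the required repetition rate in familiar units, the sketch below converts the daily shot counts quoted above into average repetition rates and compares them with NIF's one shot per day. It also computes the electrical energy a 5-MJ driver pulse would draw at the minimum 10% efficiency. Only the text's figures are used; the rate is a daily average and assumes nothing about how shots would actually be scheduled.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 s

# Figures quoted in the text.
SHOTS_PER_DAY_RANGE = (20_000, 200_000)
NIF_SHOTS_PER_DAY = 1
DRIVER_PULSE_MJ = 5.0
MIN_DRIVER_EFFICIENCY = 0.10


def shots_per_day_to_hz(shots_per_day: float) -> float:
    """Convert a daily shot count into an average repetition rate in Hz."""
    return shots_per_day / SECONDS_PER_DAY


for n in SHOTS_PER_DAY_RANGE:
    print(f"{n:,} shots/day = {shots_per_day_to_hz(n):.2f} Hz, "
          f"{n // NIF_SHOTS_PER_DAY:,}x NIF's rate")

# Electrical energy drawn per driver pulse at the minimum efficiency.
wall_plug_mj = DRIVER_PULSE_MJ / MIN_DRIVER_EFFICIENCY
print(f"electricity per {DRIVER_PULSE_MJ:.0f}-MJ pulse: {wall_plug_mj:.0f} MJ")
```

The required range works out to roughly one shot every four seconds up to a few shots per second, sustained around the clock, with each 5-MJ pulse drawing about 50 MJ of electricity at 10% driver efficiency.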

The assembly of singular technologies required to transform NIF’s unique scientific achievement into a viable power generator will require many decades of innovation and development. This challenge and the improbable prospects for a demonstration of ignition or high Q with any other concept explain why there is no likelihood of a working fusion power pilot plant in the foreseeable future, and perhaps not in this century.

References

[1] Wikipedia entry for “Ivy Mike.”

[2] A. L. Kritcher et al., “Design of the first fusion experiment to reach target energy gain G > 1,” Phys. Rev. E 109, 025204 (Feb. 2024). https://doi.org/10.1103/PhysRevE.109.025204

[3] C. A. Williams et al., “Demonstration of hot-spot fuel gain exceeding unity in direct-drive ICF implosions,” Nature Physics (5 Feb. 2024). https://doi.org/10.1038/s41567-023-02363-2

[4] D. L. Jassby, “The Quest for Fusion Energy,” Inference Vol. 7, No. 1 (2022). https://doi.org/10.37282/991819.22.30

[5] M. Maslov et al., “JET D-T scenario with optimized non-thermal fusion,” Nucl. Fusion 63, 112002 (2023). https://doi.org/10.1088/1741-4326/ace2d8

[6] D. L. Jassby, “Magnetic Fusion’s Finest is a Glorified Beam-Target Neutron Generator,” APS Forum on Physics & Society, Vol. 51, No. 3 (July 2022, online). https://engage.aps.org/fps/resources/newsletters/newsletter-archives/july-2022

[7] C. E. Moss et al., “Survey of Neutron Generators for Active Interrogation,” LANL technical report LA-UR-17-23592 (2017). https://indico.fnal.gov/event/21088/contributions/60822/attachments/38056/46206/Survey_of_Neutron_Generators_for_Active_Interrogation.pdf

[8] D. L. Jassby, “Maximum Neutron Yields in Experimental Fusion Devices,” PPPL report PPPL-1515 (1979). https://digital.library.unt.edu/ark:/67531/metadc1111062/m2/1/high_res_d/6202769.pdf

[9] A. J. Creely et al., “Overview of the SPARC Tokamak,” Journal of Plasma Physics 86, no. 5 (2020). https://doi.org/10.1017/S0022377820001257

[10] M. Claessens, ITER: The Giant Fusion Reactor, 2nd Edition (Cham: Springer, 2023). https://doi.org/10.1007/978-3-031-37762-4

[11] D. Clery, “Giant international fusion project is in big trouble,” Science (3 July 2024). https://www.science.org/content/article/giant-international-fusion-project-big-trouble

[12] D. L. Jassby, “Fusion Frenzy: A Recurring Pandemic,” APS Forum on Physics & Society, Vol. 50, No. 4 (October 2021). https://higherlogicdownload.s3.amazonaws.com/APS/a05ec1cf-2e34-4fb3-816e-ea1497930d75/UploadedImages/P_S_OCT21.pdf
